Seeing is believing. That’s a truism most of us have held onto forever. The modern version, “Pics or it didn’t happen,” only reinforces the idea that visual evidence is more honest than verbal or written evidence. Images are meant to represent something that actually exists or once existed, and they serve as mementos of people, places, objects, or events. Photographs in particular are supposed to be infallible and real; that was the promise of early photography. Deepfakes, however, challenge this idea of visual truth by presenting intentionally false video, usually on platforms where many people can see it. These videos have the potential to skew public discourse and influence elections, much as fake news stories may have done in the 2016 US election.

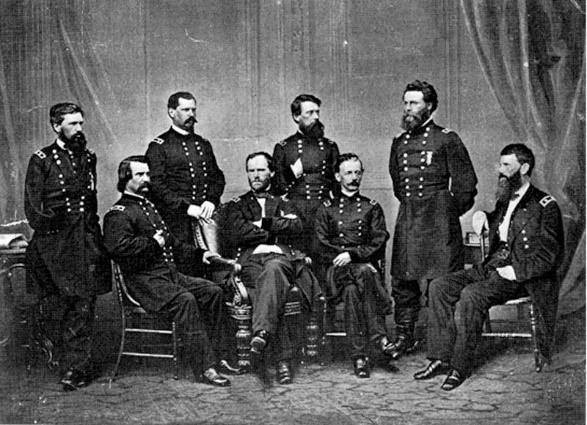

Can you spot the alteration in this image by Mathew Brady? The man at the far right, General Francis Blair, was added to the photograph.

Deepfakes are the latest chapter in a long history of image manipulation that began with still photographs. The earliest days of photography introduced tricks and editing techniques that let photographers alter images as they saw fit. From spirit photography to forensic science, these techniques have served a number of purposes, and most have carried through in some form to the present. In that sense, the idea that images are a permanent record of the truth has always been shaky. The truth that images convey is so malleable, in fact, that courts may or may not accept digital image evidence depending on what it depicts.

Is that really Abraham Lincoln resting his hands on the shoulders of his wife? Nope. This is a classic example of spirit photography. (Photograph collection of the Allen County Public Library, Fort Wayne, IN)

Deepfakes are videos that have been altered, usually digitally, in order to deceive. Although many Hollywood movies have incorporated digital manipulation of some kind since the 1970s, those manipulations serve fictional stories. Deepfake videos, on the other hand, spread through social media and are meant to look realistic enough to persuade viewers that the person in the video actually said or did what it depicts. In this video, actor and director Jordan Peele impersonates President Barack Obama, demonstrating what deepfakes look like and showing some of the process used to make them. Done well, deepfakes can be very convincing, even when none of the content is true.

Using computer software, Jordan Peele was able to impersonate Barack Obama in a convincing manner, making it appear that the former President said things he never actually said.

What is being done to combat deepfakes? At the moment, deepfakes are still in their infancy, and technology and cost barriers mean that most people cannot yet create them easily. Even so, a number of solutions are starting to crop up. The University of California, Berkeley is developing a series of tools that can help identify deepfake videos. By using algorithms to compare videos, these tools have identified deepfakes up to 96% of the time in tests. News outlets such as the Washington Post and CNN have created guides to understanding and identifying deepfakes.

But perhaps the best thing media and news consumers can do is keep in mind some basic principles of media literacy. The first: context is everything. Deepfakes often show famous people doing or saying something they would never do or say. Even if the footage is real, it may have been altered to convey a far different message than the original. This is the case with the well-known Nancy Pelosi “cheapfake,” in which a real video of her was slowed down to make her seem inebriated or ill. Whenever a video presents information that just doesn’t seem right, look up additional information.

Next, double check. If you see a clip of Lord of the Rings in which every character is played by Nicolas Cage, look up the film in a reliable source to see whether he was in it. (Spoiler: he wasn’t.)

Last, look at the deepfake itself. At the moment, many deepfake videos are not particularly high quality, and image problems tend to appear at the edges of the manipulated areas. If you notice mismatched lip syncing, fuzzy areas near a person’s temples, or murky overall quality, you may be dealing with a deepfake.

Also, don’t forget to keep your confirmation bias in check. Just because you want something to be true, even when a video seems to show it, doesn’t mean it is.

Interested in misinformation techniques? Want to learn about viral videos? Curious about how mathematics plays a role in identifying fake news and deepfakes? Ask us! iueref@iue.edu